An Evaluation of the Job Landscape and Trends in AI Ethics

RESEARCHERS

Quentin J Louis, Joshua Walker, Katherine Lai, Victoria Matthews, Kitty Atwood

PRINCIPAL INVESTIGATOR

Susanna Raja

DESIGNER

Ayomikun Bolaji

Contents.

Executive Summary.

Top 3 Recommendations.

Trends in AI and Ethics.

Although a contested and evolving term that is often used generally to describe technological revolution in society, AI can be defined as technology that can independently perform complex intelligent tasks, which were previously limited to human cognition.

(The Global Landscape of AI Ethics Guidelines, 2019; A Unified Framework of Five Principles for AI in Society, 2019)

The explosion of interest in AI technology and discourse has been reflected in a growing hiring trend in AI-related jobs.

In Indeed’s ‘The Best Jobs in the US: 2019’ editorial, 3 of the top 15 jobs were AI roles.

(Indeed, 2019)

The highest ranked of these, Machine Learning Engineer, was the top-ranked job in the US overall, with 344% growth in the number of job postings for the role between 2015 and 2018.

(Indeed, 2019).

The growth of AI has led to considerable debate surrounding issues such as the lack of accountability, discriminatory bias and malevolent use of AI systems. (The Global Landscape of AI Ethics Guidelines, 2019)

In response to this, there has been a growth in AI ethics initiatives which aim to create a framework for developing standards for AI implementation across different sectors and industries. (A Unified Framework of Five Principles for AI in Society, 2019)

There remains considerable disagreement over what constitutes ethical AI. (The Global Landscape of AI Ethics Guidelines, 2019).

In response to the confusion between definitions, meta-analyses have been carried out to characterise common key principles of AI ethics. (AI Now 2019 Report)

A Unified Framework of Five Principles for AI in Society (2019) establishes five key principles that are common amongst published guidelines:

These themes are also increasingly adopted by the private sector, which has seen a growing adoption of AI principles since 2015. (The Global Landscape of AI Ethics Guidelines, 2019)

2018 has been identified as a turning point for corporate AI ethics initiatives, with many high profile companies such as Google, Facebook and IBM publishing ethics guidelines at this time. (AI Index 2021 Report)

Increased adoption of ethics principles corresponds to increased ethical awareness among employees.

A survey of nearly 900 executives across different sectors in 10 countries in 2020 found that:

amounting to respective increases in awareness of 46%, 33% and 30% from 2019.

(Capgemini: AI and the Ethical Conundrum, 2020)

Deloitte’s third ‘State of AI in the Enterprise’ report surveyed nearly 3000 executives of firms and organisations that have adopted AI approaches, finding that 95% of respondents were concerned about ethical risks of AI (Deloitte: State of AI in the Enterprise, Third Edition, 2020).

Survey results also suggest that there is a growing number of job roles that explicitly aim to address the question of AI ethics.

For example, Capgemini found that 63% of IT and AI data professionals and 49% of sales and marketing executives surveyed could point to a management role responsible and accountable for ethical AI issues.

(Capgemini: AI and the Ethical Conundrum, 2020)

New job titles such as ‘Chief AI Ethics Officer’ and ‘AI Ethicist’ are emerging, although AI ethics is often incorporated into other executive roles, such as CDO and CAO (Are you asking too much of your Chief Data Officer? by Davenport and Bean, 2020; Forbes: Rise of the Chief Ethics Officer, 2019).

Public Awareness and High Profile Examples of Corporate AI Ethics.

Alongside increased corporate awareness of AI ethics issues, public awareness has also grown. The 2021 AI Index report surveyed popular news topics, finding that themes such as AI ethics guidance, research and education were particularly popular, as well as ethical concerns related to facial recognition software and algorithmic bias. (2021 AI Index Report)

However, rising public awareness raises the concern that firms may act performatively to include AI ethics roles in a form of ‘ethics-washing’, while neglecting the necessary integrated, structural change.

(IBM: Everyday Ethics for Artificial Intelligence)

Many high profile roles have gained attention, such as Bank of America’s ‘Enterprise Data Governance Executive’ and Google’s Ethical AI team.

(Schrag, 2018)

Concerns that firms are using these roles simply to comply with ethical obligations peaked when Google dismissed two members of its Ethical AI team following a report discussing AI bias. (Naughton, 2021)

The 2021 AI Index reveals that this event received particular attention in the media, naming it the second most popular news topic related to ethical AI after the release of the European Commission’s white paper on AI. (2021 AI Index Report)

These high profile cases suggest there may be a conflict between the actions driven by the increasing pressure for firms to adhere to ethical guidelines, and the change needed to ensure truly ethical practice.

Key Issues in AI Ethics.

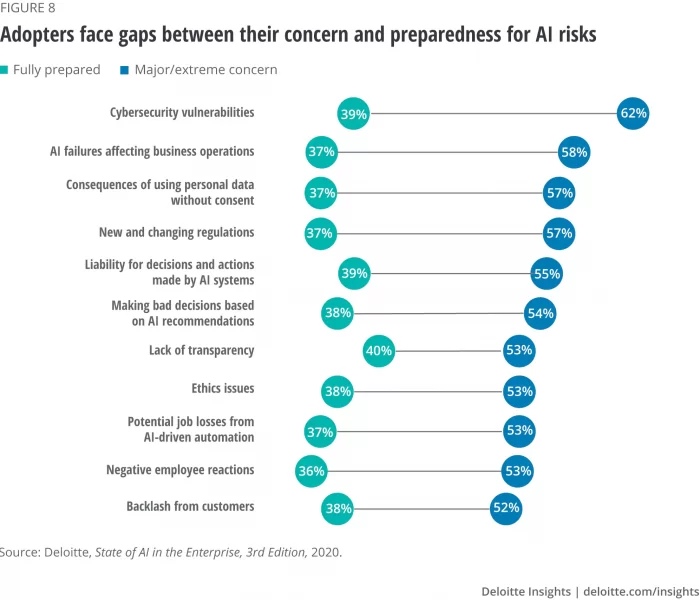

Despite increasing acknowledgement of the importance of ethics, there remain disparities between risk perception and preparedness.

For example, in Deloitte’s 2020 survey, 53% of executives perceived ethical issues as a major or extreme concern, but only 38% of respondents were fully prepared to address these risks.

This alarming gap between risk recognition and preparedness reflects the adoption of ‘soft law’ approaches which are persuasive and non-legislative.

(The Global Landscape of AI Ethics Guidelines, 2019)

This approach has been criticised for its lack of consideration of practical implementation.

(AI Now 2019 Report).

The proliferation of soft policy approaches not only neglects practical implementation, but also often neglects geographical and demographic bias in the development of ethical guidelines (The Global Landscape of AI Ethics Guidelines, 2019).

Many guidelines and studies originate from largely white, Western institutions, leading to the exclusion of underrepresented groups (AI Now 2019 Report).

There is thus a demand for policy and guidance to be diversified and for more practical approaches within corporations to be developed simultaneously in order to translate general ethical guidelines into internal structures and practices.

Currently, adoption of ethical AI principles in the private sector has been heterogeneous between corporations, and reports have suggested reluctance to admit AI ethics as a corporate issue (IBM: Everyday Ethics for Artificial Intelligence, 2020).

For example, IBM’s survey of executives of companies using AI across varying sectors found that most named external factors such as tech companies, governments and customers as having the greatest influence on AI ethics, rather than factors the firms themselves claimed responsibility for.

A managerial approach to introducing AI ethics is evident within the private sector, yet established roles for ethical responsibility such as AI ethicist and Chief AI Ethics Officer have not been adopted across all firms.

(Capgemini: AI and the Ethical Conundrum, 2020)

Furthermore, there is a growing awareness that AI ethics should be introduced at all levels, not just via managerial positions.

Considering the evolution of AI ethics and its continuing limitations within the private sector, the remainder of this report explores the current job market to identify the state of AI ethics roles.

Initial Market Scope.

As algorithms increasingly automate everyday activities across industries such as healthcare, marketing, transport, finance, insurance, manufacturing, security and defence, agriculture and the public sector, it is increasingly apparent that considering AI and its ethical ramifications is no longer simply the prerogative of software and IT firms.

Decisions that are made using algorithms impact nearly every aspect of our lives.

Drawing on information about an individual, AI is used to assess the likelihood of reoffending (for example, by the COMPAS system in US courts), to determine eligibility for insurance, education and recruitment, to enact predictive policing and, more insidiously, even to surveil citizens via facial recognition.

When such algorithms are coded with bias or using ‘black boxes’ that obscure their justifications for the decision-making to which they contribute, AI bears the risk of disenfranchising members of a society.

AI’s considerable social, political and economic influence is such that jobs at the intersection of AI and ethics demand an interdisciplinary approach.

Fostering multistakeholderism and a diversity of opinion is necessary to mitigate the risk of issues facing AI, such as algorithmic bias and lack of transparency.

In the past 5 years, a substantial amount of research energy has been devoted to establishing AI ethics principles.

AlgorithmWatch’s AI Ethics Guidelines Global Inventory compiled 160 documents by various companies and organisations all setting out AI principles and guidelines. (Thümmler: 2020)

The Berkman Klein Center’s white paper identified 36 prominent documents that set out ethical approaches to AI principles between 2016 and 2019 alone.

(Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI, 2020)

The Berkman Klein Center study speaks to the significant breadth of job sectors within which research into ethical AI principles has been undertaken: within governmental institutions; inter-governmental organisations; civil society; private sector companies; advocacy groups and multi-stakeholder institutions.

The Berkman Klein Center report does not simply evidence the breadth of job sectors incorporating ethical approaches to AI. It also shows that, as early as 2016-2019, there was an awareness within these sectors regarding the need for cross-sector dialogue in order to practice AI ethics.

64% of all the approaches to AI principles emphasised the importance of multi-stakeholder collaboration, defined as: ‘encouraging or requiring that designers and users of AI systems consult relevant stakeholder groups while developing and managing the use of AI applications’ (Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI, 2020).

IBM’s ‘Everyday Ethics for AI’ report (2019) recommended cross-sector dialogue between AI developers, policymakers and academics to encourage a diversity of perspectives within AI systems.

Access Now’s ‘Human Rights in the Age of AI’ (2018) advised that private-sector actors should assess AI systems by collaboration with external human rights organisations as well as human rights and AI experts.

The emphasis in these reports on the need for multistakeholderism in developing ethical AI, particularly across job sectors, is reflective of a wider trend in AI Ethics scholarship to herald a move ‘from principles to practice’ (European Parliamentary Research Service, 2020).

As the 160 ‘AI principles’ documents compiled by AlgorithmWatch and the 36 compiled by The Berkman Klein Center respectively show, the AI ethics research landscape contains a proliferation of theoretical approaches to the subject.

Moving to apply these principles in practice, the current rhetoric in discussions of ethical AI marks a shift to focussing on the ways in which ethics can be more technically incorporated into technology.

This rhetoric advocates for the worth of roles that align theoretical research about ethics and/or AI with practical initiatives.

Due to the constraints of time, DataEthics4All has focused on two key practical sectors that are tending to work collaboratively with ethical theorists and researchers across traditional occupational boundaries:

In order to move ‘from principles to practice’ in terms of advancing AI ethics, AI policy experts are adopting more collaborative approaches to integrate ethical perspectives into governance.

The European Parliamentary Research Service recommendations (2020) particularly champion the benefits of this collaboration, using seven key insights from theoretical ethics and technology research as the framework for their AI policy recommendations.

The recognition that the issues tackled in AI policy roles necessarily involve, or should involve, AI ethics was evident as early as 2019.

DataEthics4All has identified that all 5 of the ‘Emerging and Urgent Concerns in 2019’ listed in the AI Now 2019 Report can also be characterised as issues of ethical concern (AI Now 2019 Report).

THE 5 CONCERNS READ AS FOLLOWS:

Similarly aligning principles of ethics and practical governance, the Public Policy Programme at The Alan Turing Institute utilises the Data Ethics Framework as a practical tool to support their policy direction (Understanding Artificial Intelligence Ethics and Safety, 2019).

This approach to AI Ethics is often alternatively characterised by phrases such as ‘embedding values in design’; ‘value-sensitive design’ and ‘ethically aligned design.’

The trend towards favouring proactive ethics in respect of AI has coincided with the emergence of an approach to AI called Ethics by Design.

Ethics by Design as defined in AI Ethics is ‘a key component of responsible innovation that aims to integrate ethics in the design and development stage of the technology’ (Artificial Intelligence, Responsibility Attribution, and a Reasonable Justification of Explainability, 2020).

Traditionally, the jobs linked to the design and development stage of AI might include AI researchers, data scientists, engineers, data analysis roles, UX/UI designers and their associated positions.

Indeed’s 2018 research into the emerging trends in artificial intelligence evidences the pervasiveness of such design and development roles in the AI field (Jobs of the Future: Emerging Trends in Artificial Intelligence, 2018).

Among the job titles identified by Indeed in 2018 as most often requiring AI skills, 94.2% of machine learning engineer postings and 75.1% of data scientist postings called for such skills, evidencing a clear demand for design and development roles.

In order to practice Ethics by Design, rather than simply having their design and development teams working in silos, more organisations are encouraging their teams to collaborate with ethicists.

Microsoft recently created a specialised Ethics and Society team within their engineering function (Ethics by Design: An Organisational Approach to Responsible Use of Technology, 2020).

This allowed ethics experts to actively encourage engineers to embed ethical principles within AI at the early stage of development, thereby mitigating the risk of reifying ethical problems into their technology.

Similarly, Workday initiated a cross-functional AI Ethics Initiative task force to develop the company’s machine learning ethics, involving experts from sectors including: product and engineering, legal, public policy and privacy and ethics and compliance (Ethics by Design: An Organisational Approach to Responsible Use of Technology, 2020).

Though progress is still ongoing, the emphasis in the AI Ethics field on multi-stakeholder approaches, on policy that uses ethical principles and on ethics by design is likely to encourage a more collaborative AI job market.

If design and engineering functions increasingly operate alongside ethicists, this is likely also to bridge the gap between STEM subjects and their humanities counterparts.

In-depth Analysis of the Current Job Market.

To obtain a snapshot of the current AI ethics job market, data was downloaded from 80,000 Hours, a US-based organisation that focuses explicitly on ethical employment (80000 hours).

Available postings from 20/09/20 to 29/06/21 under the problem area ‘AI safety & policy’ were selected.

The job roles listed as AI safety and policy can be broadly characterised as roles in AI ethics, reflecting how the governance of AI is increasingly being wedded to AI ethics.

Research using an independent job board validated this link, showing that 12% of responsible technology job postings were classed as policy roles.

The independent job board used is the All Tech is Human ‘Responsible Tech Job Board’.


Roles associated with AI Safety and Policy account for 17% of all listings on the 80,000 Hours database, the second most popular sector after global health and poverty, resulting in a total sample of 122 postings.

As 80,000 Hours is US-based, their job postings may have an English-speaking or US bias, so the results should not be accepted as representative of the global distribution of jobs in AI ethics.

Nevertheless, the US is a leading player in AI ethics so the dataset provides a useful insight into the current state of employment with an ethical focus within the AI sector.

Of all the published job postings, the US jobs market clearly dominated, accounting for 73% of total jobs, more than all other countries combined.

The country with the second highest number of postings was the UK (10%).

Location of AI Safety and Policy Postings between 20/09/2020 – 29/06/2021


The dataset is limited to job postings from September 2020 to June 2021 and thus should only be treated as a snapshot of the current jobs market.

In order to elucidate the various synonyms for AI Ethics, DataEthics4All conducted research into the vocabulary deployed in job descriptions that advertised roles involving AI ethics.

Previous attempts to categorise the terms used to refer to ethical and human-rights based approaches to AI were relatively sparse.

However, the Berkman Klein Center’s 2020 analysis of the 36 prominent documents that set out ethical approaches to AI principles between 2016 and 2019 was particularly fruitful.

The Center evidenced the eight key themes by which AI ethics was most consistently described.

(Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI, 2020)

EIGHT KEY THEMES

Privacy

Human Control of Technology

Transparency & Explainability

Safety & Security

Fairness and Non-discrimination

Accountability

Professional Responsibility

Promotion of Human Values

The DataEthics4All research used these broad thematic categories to investigate the vocabulary used in job descriptions, across a larger sample of 122 postings.

The data shows that the most frequent words used to describe AI Ethics jobs are ‘safety’ or ‘security’, suggesting that the AI ethicist role is in large part concerned with anticipating and mitigating possible security risks caused by AI.

However, this conclusion has various limitations, not least that we might reasonably expect a search for job descriptions containing either one of two words to return more results than a search for a single word.

Even so, ‘safety’ or ‘security’ clearly returns significantly more results than any of the other two-word searches, such as ‘transparency’ or ‘explainability.’

Another possible limitation of our findings that cannot be so easily resolved is that the spread of our data, characterised by 80,000 Hours as ‘AI safety & policy’ roles, tends towards jobs that inherently involve ‘safety.’

This crucial limitation of the dataset makes it impossible to conclude that jobs about ‘safety’ or ‘security’ dominate the AI Ethics job market as a whole.

Despite these limitations, our data does illuminate the extent to which different vocabulary is used to refer to jobs in the field of ‘AI Ethics,’ suggesting a lack of fixed scope for such jobs that reflects the relative novelty of the field.
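The report does not describe how the vocabulary search was carried out. As a minimal sketch of one way such a count could be made, the Python below counts how many job descriptions mention at least one keyword from each theme. The keyword mapping, the function name `count_theme_mentions` and the sample descriptions are all assumptions made for illustration; they are not DataEthics4All's data or method.

```python
import re
from collections import Counter

# Hypothetical theme-to-keyword mapping, loosely based on the themes above.
THEME_KEYWORDS = {
    "safety or security": ["safety", "security"],
    "transparency or explainability": ["transparency", "explainability"],
    "privacy": ["privacy"],
    "accountability": ["accountability"],
}


def count_theme_mentions(descriptions, theme_keywords=THEME_KEYWORDS):
    """Count the descriptions that mention at least one keyword of each theme."""
    counts = Counter({theme: 0 for theme in theme_keywords})
    for text in descriptions:
        # Lowercase and split into alphabetic words for whole-word matching.
        words = set(re.findall(r"[a-z]+", text.lower()))
        for theme, keywords in theme_keywords.items():
            if words.intersection(keywords):
                counts[theme] += 1
    return counts


# Invented sample descriptions, for illustration only.
sample = [
    "Research engineer working on AI safety and security.",
    "Policy analyst focused on transparency and accountability.",
    "Engineer improving model safety.",
]
counts = count_theme_mentions(sample)
safety_only = count_theme_mentions(sample, {"safety": ["safety"]})
```

Because a description is counted when it contains either keyword, a two-word theme can never return fewer matches than one of its words searched alone, which is the limitation noted above.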


The analysis revealed the three most common combinations of role types, after ‘various different combinations’, to be:

Engineering and research (28%)

Engineering, policy and research (9%)

Policy and research (7%)

Making up 28% of all combinations, the collaboration between engineering and research emerges as particularly significant in the AI Ethics field.

This collaboration reflects DataEthics4All’s findings regarding the increasing emphasis in the AI Ethics field on practicing Ethics by Design and incorporating ethical research into engineering.

While the 7% of combinations that were between policy and research roles is unsurprising given the traditional relation between these disciplines, the 9% of collaborations represented by engineering, policy and research is informative.

The collaboration would suggest that AI policy is being informed by experts in the mechanics of AI and perhaps even that the engineering of AI is anticipating possible future regulatory issues with the technology from the outset.

However, additional research would be necessary to corroborate these findings.
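For readers who wish to repeat this kind of tally on their own data, the following Python is a minimal sketch of how shares of role-type combinations could be computed. It assumes each posting is tagged with a list of role types and that only postings with more than one role type count as combinations; the report does not state its method, and the function name `combination_shares` and the sample postings are hypothetical.

```python
from collections import Counter


def combination_shares(postings):
    """Return each role-type combination's share of multi-role postings, in percent."""
    # A frozenset ignores tag order, so ["a", "b"] and ["b", "a"] count together.
    combos = Counter(
        frozenset(roles) for roles in postings if len(set(roles)) > 1
    )
    total = sum(combos.values())
    return {
        " + ".join(sorted(combo)): round(100 * n / total, 1)
        for combo, n in combos.most_common()
    }


# Invented postings, for illustration only.
sample = [
    ["engineering", "research"],
    ["research", "engineering"],
    ["policy", "research"],
    ["engineering", "policy", "research"],
    ["research"],  # single role type: not a combination, so excluded
]
shares = combination_shares(sample)
```

Whether single-role postings belong in the denominator is a design choice that changes every percentage, so it would need to be stated alongside any reported shares.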

77% of job listings specified only 0-2 years’ experience, pointing to the accessibility of these roles.

However, the data collected does not account for discrimination between candidates based on experience later in the recruitment process.

Similarly, only 24% of postings required a master’s degree or higher, again highlighting the obtainability of roles within AI ethics.


RECOMMENDATIONS.

CONCLUSIONS.

AI remains a disruptive force at the forefront of technological research with huge potential to generate positive societal change. As the ethical risks of AI are further explored, there is increasing demand to address the corresponding potential for the technology to negatively impact society.

Accounting for ethical risks via the private sector is an essential step to increasing transparency and accountability and will enable the better adoption of AI across the corporate landscape and society at large.

Although an impetus for collaboration between disciplines and across job sectors is evident within the AI Ethics field, much remains to be done to ensure that the functions developing and designing AI are in constant dialogue with ethicists, policy-makers, researchers and other stakeholders.

Those that build and design the technologies that are becoming increasingly integrated into our social, political and economic interactions have a responsibility to ensure that AI reflects a diversity of perspectives.

More than simply collaborating across functions, the future of the AI Ethics field may require entirely new roles to be developed that better align design and development perspectives with ethical ones.

For, as Palm and Hansson identify, despite cross-sector collaboration, engineers are still themselves ‘seldom trained to discuss the ethical issues in a pre-emptive perspective’ (Palm; Hansson: 2006). The skills necessary to identify and conceptualise ethical issues are still, for the most part, the expected prerogative of applied ethicists: the European Parliamentary Research Service claims that it is ‘unlikely’ that the current data scientists or engineers possess them (2020).

The conclusion that emerges is that, if jobs do not yet exist that recruit those with the necessary diversity of skills and perspectives to combine technical design and development with ethical frameworks, a new role may need to be created for such ethical engineers.

Given that the field is evolving at such a rapid pace, it is reasonable to assume that AI Ethics and its associated job market will adapt to meet the challenge of technology that requires inherently collaborative, multi-disciplinary approaches.

DataEthics4All
